I am certainly a technology enthusiast. My home is littered with computers and other electronic devices. Unfortunately, these often require attention, because neglected devices lead to problems. Maintaining them manually would quickly turn into hours of tedious inspection, configuration, and documentation, and that sounds super boring. I’ve used Ansible for many years to do this at home, and it’s about time I wrote up how I use it.

Ansible is free, open source configuration management software, powered by Python, SSH, and YAML. The core is simple but powerful. Unless you shell out some cash for Tower, though, running it is still a manual step.

My Solution

Enter ansible-docker, an image I created for automated Ansible execution using GitLab CI.

- Docker Hub rveach/ansible

- GitLab Source: rveach/ansible-docker

While you can pull it and run it interactively, it’s really meant for use in CI/CD environments. To use it, just create a separate repo, and structure it in this manner. The integration takes place in the .gitlab-ci.yml file.

    playbooks/          # put all ansible playbook stuff here
        site.yml        # or any other file
        group_vars/     # with group_vars and roles
        roles/          # just do whatever you've configured
                        # follow standard ansible best practices
    ssh/                # ssh info
        id_rsa          # private key
        id_rsa.pub      # keep the public key here too
        known_hosts     # known hosts file
        config          # ssh config file
    hosts               # hosts file
    ansible.cfg         # ansible.cfg file
    .gitlab-ci.yml      # define CI jobs here

SSH Security

When performing configuration management over SSH, the remote user must be either privileged or have the ability to perform critical tasks as a privileged user. This is not to be taken lightly. In my environments, I do not allow SSH in through my firewall. Instead, I’ve installed a GitLab runner behind my firewalls in each location.

In addition to network security, this image allows you to include your own ssh keys, known_hosts file and ssh config file.

- The private key file name will default to id_rsa, but can be overridden with the environment variable $SSH_KEY_NAME.

- The private key file can be password protected; just supply the environment variable $SSH_KEY_PASSPHRASE to decrypt it.

- The known_hosts file can be populated for each of your hosts using the output of ssh-keyscan -H $REMOTE_HOST. Alternatively, you can set host_key_checking = False in the ansible.cfg if you don’t care to validate host keys.

- …and of course, the ssh config file can be set up in any way necessary.
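As a rough sketch of how these options might be wired into a pipeline, the fragment below sets a non-default key name in .gitlab-ci.yml. The key name id_ed25519 is a hypothetical example, not something the image requires, and the sketch assumes the key file sits in the ssh/ directory described earlier.

```yaml
# .gitlab-ci.yml (fragment, illustrative only)
variables:
  # Hypothetical key file name; assumes the key is stored as ssh/id_ed25519.
  SSH_KEY_NAME: id_ed25519

# SSH_KEY_PASSPHRASE is deliberately absent from this file. One reasonable
# approach is to define it as a masked CI/CD variable in the GitLab project
# settings so the passphrase never lands in the repository.
```

Keeping the passphrase out of the repo means that a leaked clone exposes only an encrypted key, not a usable one.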

You may want to look into other standard ways of securing SSH, like this one on nixCraft: Top 20 OpenSSH Server Best Security Practices

Ansible Vault

When using sensitive information in an Ansible playbook, it’s highly recommended to use Ansible Vault to encrypt those contents. The wrapper script can read a vault password from either the environment variable $VAULT_PASSWORD (recommended) or the command line argument --vault-password (not recommended for security reasons).
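One common way to organize vaulted values, shown here only as a sketch, is to keep a readable variables file that points at an encrypted one. The paths and the variable names db_password and vault_db_password are hypothetical; they assume the playbooks/group_vars/ layout described above.

```yaml
# playbooks/group_vars/all/vars.yml (hypothetical path, stored in plain text)
# Roles reference db_password; the real value lives in the vaulted file.
db_password: "{{ vault_db_password }}"

# playbooks/group_vars/all/vault.yml (hypothetical path, stored encrypted)
# Before running `ansible-vault encrypt playbooks/group_vars/all/vault.yml`
# the file would hold something like:
#   vault_db_password: "example-only-value"
```

With this split, diffs of vars.yml stay readable while the secret itself is only ever committed in encrypted form.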

Home Servers

    deploy-homeservers:
      stage: deploy
      image: rveach/ansible:latest-arm
      script:
        - /usr/local/bin/run.py --playbooks site_homeservers.yml
      only:
        - master
        - homeservers
      except:
        variables:
          - $ANSIBLE_PLAYBOOK_LIST
      tags:
        - armhf
        - homelab

Digital Ocean Droplets

    deploy-droplets:
      stage: deploy
      image: rveach/ansible:latest
      script:
        - /usr/local/bin/run.py --playbooks site_droplets.yml
      only:
        - master
        - droplets
      except:
        variables:
          - $ANSIBLE_PLAYBOOK_LIST
      tags:
        - droplet

Dynamic Deploy

    deploy-dynamic:
      stage: deploy
      image: rveach/ansible:latest-arm
      script:
        - /usr/local/bin/run.py --playbooks ${ANSIBLE_PLAYBOOK_LIST}
      only:
        - master
      except:
        variables:
          - $ANSIBLE_PLAYBOOK_LIST == null
      tags:
        - armhf
        - homelab

These jobs provide flexibility in a few ways:

- Dynamic Deploy is executed when a playbook list is passed through the optional variable $ANSIBLE_PLAYBOOK_LIST. This is mainly used when triggering CI tasks from other projects via the external API.

- The other jobs run for master, but can also be set up to run from other branches. I use this to speed up the feedback loop when actively working on a role.

- Note: I’m also pulling two different tags for the image. Until I spend some time to configure a multi-arch build, separate tags are needed for amd64 and arm. I just happen to use a dedicated Raspberry Pi 3B+ for my GitLab Runner at home.
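To illustrate the Dynamic Deploy case, here is a sketch of a job in a different project that calls GitLab's pipeline trigger API and passes a playbook list. The job name and the variables ANSIBLE_PROJECT_ID and ANSIBLE_TRIGGER_TOKEN are assumptions for illustration (they would need to be defined as CI/CD variables in the calling project), and the job image is assumed to have curl available.

```yaml
# .gitlab-ci.yml in another project (hypothetical job, illustrative only)
trigger-ansible:
  stage: deploy
  script:
    # ANSIBLE_TRIGGER_TOKEN: a pipeline trigger token created in the Ansible repo (assumed).
    # ANSIBLE_PROJECT_ID: the numeric ID of the Ansible repo (assumed).
    # CI_API_V4_URL is a predefined GitLab CI variable.
    - >
      curl --fail --request POST
      --form "token=${ANSIBLE_TRIGGER_TOKEN}"
      --form "ref=master"
      --form "variables[ANSIBLE_PLAYBOOK_LIST]=site_homeservers.yml"
      "${CI_API_V4_URL}/projects/${ANSIBLE_PROJECT_ID}/trigger/pipeline"
```

Because the request sets ANSIBLE_PLAYBOOK_LIST, the except rules shown earlier should skip the fixed jobs and leave only the dynamic job to run.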

In Use:

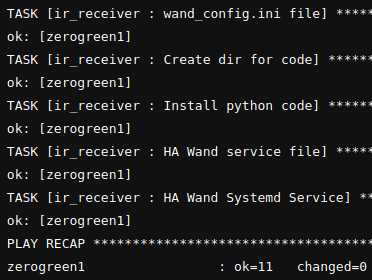

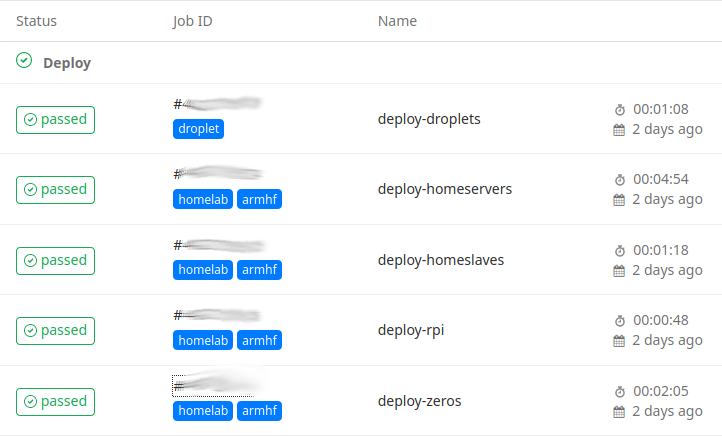

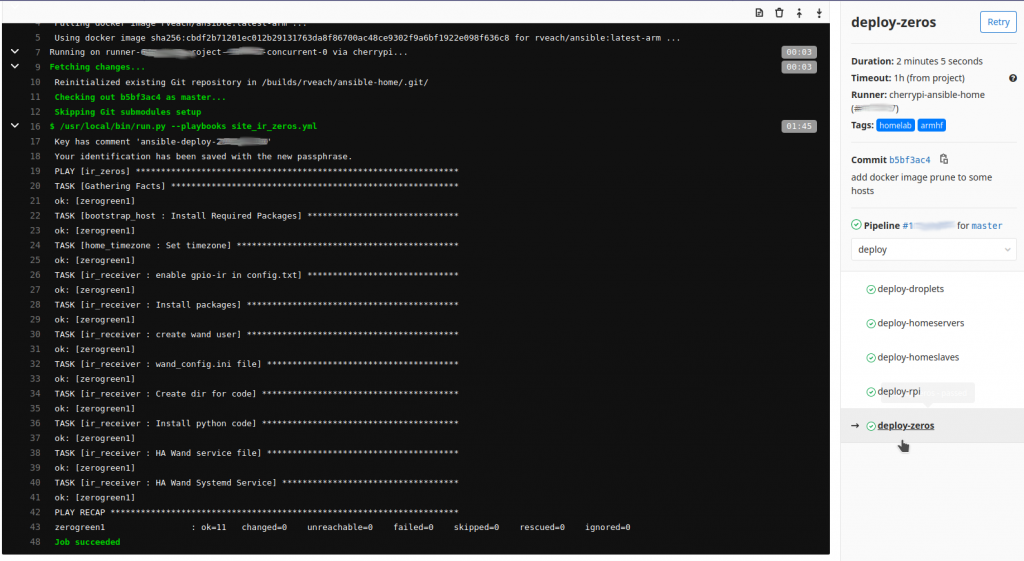

Now, whenever I push to the repo, I know that Ansible will execute, and I rely on GitLab’s notifications to let me know when there has been a failure.

Should any individual job fail, the log is also available:

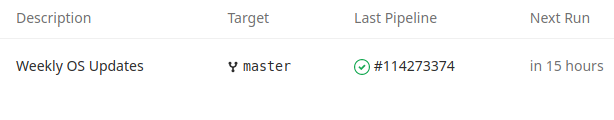

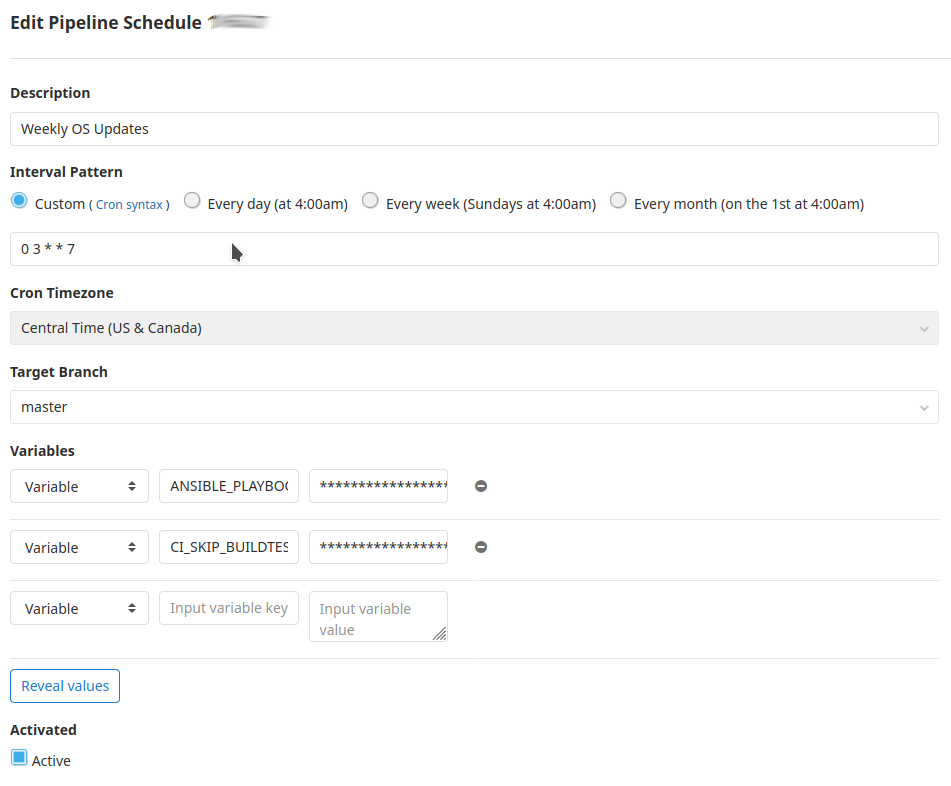

Scheduling Jobs

Of course, deploying on repo push is only half the story. Any well-managed environment should have configuration deployed on a schedule to automate updates and prevent configuration drift. Luckily, GitLab CI allows you to schedule pipelines.
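A pipeline schedule that targets master should already match the only: master rule in the jobs shown earlier, so no extra configuration may be needed. If a job should run only on a schedule, a sketch along these lines could work; the job name and the playbook site_updates.yml are hypothetical.

```yaml
# .gitlab-ci.yml (fragment, illustrative only)
deploy-scheduled-updates:
  stage: deploy
  image: rveach/ansible:latest-arm
  script:
    # site_updates.yml is a hypothetical playbook name
    - /usr/local/bin/run.py --playbooks site_updates.yml
  only:
    # run this job only in pipelines started by a pipeline schedule
    - schedules
  tags:
    - armhf
    - homelab
```

The schedule itself (cron expression and target branch) is created in the GitLab project's pipeline schedule settings rather than in this file.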

In conclusion, it took me a bit of time to work out all the kinks on this, but once completed, it’s been incredibly smooth. In writing this, I hope others can benefit from my work or find inspiration to create their own solution.

Cover image by Harrison Broadbent